Okay, so, today I’m gonna walk you through my little project: a Paula Badosa prediction thingy. It’s nothing fancy, but I learned a ton, and maybe you can too.

The Idea

Basically, I wanted to see if I could build a simple model to predict whether Paula Badosa would win her next tennis match. I know, I know, super original. But hey, gotta start somewhere, right?

Getting the Data

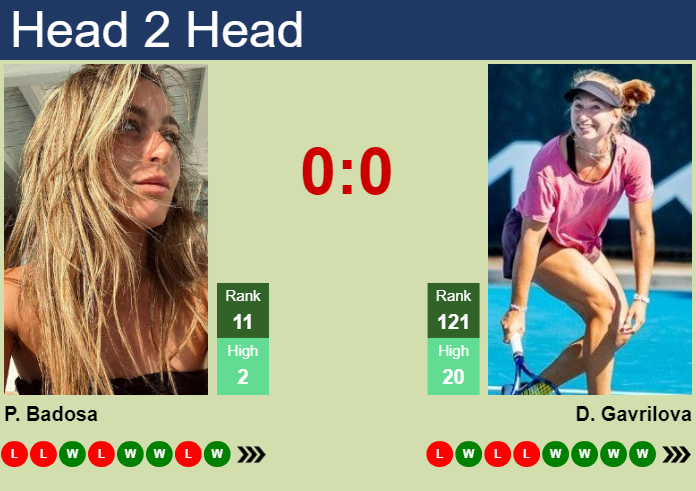

First things first, I needed data. Lots of it. I spent ages scraping match results from some tennis data websites. This part was a real pain because every site formats things differently. I ended up writing a bunch of custom scripts using Python and Beautiful Soup to grab the stats I needed: her win/loss record, her opponent’s win/loss record, their head-to-head record, surface type, ranking, and a few other bits and bobs.
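Here’s roughly what one of those scrapers boils down to. It’s a minimal sketch, not a real script: the HTML, the `results` table id, the column names, and the rows are all made up, because every real site lays its pages out differently. It parses a local string instead of fetching a page, so it runs offline.

```python
from bs4 import BeautifulSoup

# Made-up HTML standing in for one site's results page (hypothetical layout).
SAMPLE_HTML = """
<table id="results">
  <tr><th>Date</th><th>Opponent</th><th>Surface</th><th>Result</th></tr>
  <tr><td>2023-01-01</td><td>Opponent A</td><td>Clay</td><td>W</td></tr>
  <tr><td>2023-01-03</td><td>Opponent B</td><td>Clay</td><td>L</td></tr>
</table>
"""


def parse_results_table(html: str) -> list[dict]:
    """Turn one results table into a list of dicts keyed by lowercased header text."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id="results")
    if table is None:
        return []  # page didn't have the table we expected
    rows = table.find_all("tr")
    headers = [th.get_text(strip=True).lower() for th in rows[0].find_all("th")]
    records = []
    for row in rows[1:]:
        cells = [td.get_text(strip=True) for td in row.find_all("td")]
        if len(cells) == len(headers):  # skip malformed rows instead of crashing
            records.append(dict(zip(headers, cells)))
    return records


if __name__ == "__main__":
    for record in parse_results_table(SAMPLE_HTML):
        print(record)
```

With several sites, you’d end up with one parser like this per site, each mapping its own layout into the same set of column names.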

Cleaning and Prepping

Data cleaning… Ugh. This took way longer than I thought. Missing values everywhere! For each gap I had to decide whether to fill it in (impute) or just toss the row, and in the end I did a bit of both. For really important stuff like rankings, I tried to look up the historical rankings instead of guessing. For less important things, I just filled the gap with the column’s average or median. I also had to convert categorical data (like surface type) into numbers, using a technique called one-hot encoding. Basically, I turned “clay”, “grass”, and “hard” into three columns of 0s and 1s, one per surface.
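A minimal pandas sketch of that clean-up, assuming made-up column names (`opponent_rank`, `opponent_win_pct`, `surface`, `badosa_won`) and a few invented rows. Rows whose ranking is still missing get dropped, a less important column gets median-filled, and `pd.get_dummies` does the one-hot encoding.

```python
import numpy as np
import pandas as pd

# Invented example rows; column names are assumptions, not a real dataset.
raw = pd.DataFrame({
    "opponent_rank": [12.0, np.nan, 45.0, 8.0],
    "opponent_win_pct": [0.62, 0.55, np.nan, 0.71],
    "surface": ["clay", "hard", "grass", "hard"],
    "badosa_won": [1, 0, 1, 0],
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Drop rows missing a key field, median-fill a minor one, one-hot encode surface."""
    # Important field: if the ranking is still missing after looking it up, toss the row.
    df = df.dropna(subset=["opponent_rank"])
    # Less important field: fill gaps with the median of the remaining rows.
    df = df.assign(
        opponent_win_pct=df["opponent_win_pct"].fillna(df["opponent_win_pct"].median())
    )
    # One-hot encoding: one 0/1 column per surface (surface_clay, surface_grass, surface_hard).
    return pd.get_dummies(df, columns=["surface"], dtype=int)


if __name__ == "__main__":
    print(clean(raw))
```

One caveat: computing the median over the whole dataset before the train/test split lets a tiny bit of test-set information leak into training. Fitting scikit-learn’s `SimpleImputer` and `OneHotEncoder` on the training set only avoids that.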

Building the Model

I decided to keep things simple and use a Logistic Regression model. I know, not the fanciest algorithm, but it’s easy to understand and quick to train. I used scikit-learn in Python and split my data into a training set (80%) and a test set (20%). I trained the model on the training set, then used the test set to see how well it performed.
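The training step looks something like this in scikit-learn. It’s a sketch: the demo uses synthetic random features with an invented labelling rule rather than real match data, so the accuracy it prints says nothing about Badosa. Two choices here are additions, not necessarily what the original model did: `stratify=y` keeps the win/loss ratio the same in both sets, and the `StandardScaler` step puts features on a common scale, which matters because logistic regression’s default regularization is scale-sensitive.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def train_and_score(X, y, seed=42):
    """80/20 split, fit logistic regression, return (model, test accuracy)."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed, stratify=y  # same win/loss ratio in both sets
    )
    # Scale features first, then fit the classifier, as one pipeline.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    model.fit(X_train, y_train)
    return model, model.score(X_test, y_test)


if __name__ == "__main__":
    # Synthetic stand-in data: 200 fake "matches" with 3 numeric features.
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 3))
    # Invented labelling rule plus noise, just so there is something to learn.
    y = (X[:, 0] - 0.5 * X[:, 1] + rng.normal(scale=0.5, size=200) > 0).astype(int)
    model, accuracy = train_and_score(X, y)
    print(f"test accuracy: {accuracy:.2f}")
```

Something worth considering: matches happen in order, so a chronological split (train on older matches, test on the most recent ones) is closer to how the model would actually be used than a random 80/20 split.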

Evaluating the Model

The results were… okay. The accuracy was around 65%. Not amazing, but better than flipping a coin! I looked at the confusion matrix to see where the model was making mistakes. It seemed to struggle most with upsets: when Badosa was the underdog, it often predicted she’d lose even in matches she actually won.
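Here’s a sketch of those checks using scikit-learn’s `confusion_matrix` and `DummyClassifier`. The `majority_baseline` helper is worth running next to the 65%: “better than a coin flip” means more if the model also beats always predicting the more common outcome. `upset_miss_rate` is a hypothetical helper that measures the upset problem directly, assuming a `rank_diff` value defined as Badosa’s ranking minus her opponent’s (so positive means she was the underdog).

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, confusion_matrix


def evaluate(y_true, y_pred):
    """Accuracy plus the four confusion-matrix cells (label 1 = Badosa won)."""
    # With labels=[0, 1], rows are true labels and columns are predictions.
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return {
        "accuracy": float(accuracy_score(y_true, y_pred)),
        "tn": int(tn), "fp": int(fp), "fn": int(fn), "tp": int(tp),
    }


def majority_baseline(y_train, y_test):
    """Accuracy of always predicting the most common label from the training set."""
    # DummyClassifier ignores the features, so a single column of zeros is enough.
    dummy = DummyClassifier(strategy="most_frequent")
    dummy.fit(np.zeros((len(y_train), 1)), y_train)
    return float(dummy.score(np.zeros((len(y_test), 1)), y_test))


def upset_miss_rate(y_true, y_pred, rank_diff):
    """Among underdog matches Badosa actually won, the share predicted as losses.

    Hypothetical convention: rank_diff = badosa_rank - opponent_rank,
    so rank_diff > 0 means she was the lower-ranked player.
    """
    y_true, y_pred, rank_diff = (np.asarray(a) for a in (y_true, y_pred, rank_diff))
    underdog_wins = (rank_diff > 0) & (y_true == 1)
    if not underdog_wins.any():
        return float("nan")  # no underdog wins to judge
    return float(np.mean(y_pred[underdog_wins] == 0))
```

For example, `evaluate([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1])` gives an accuracy of 4/6 with tn=1, fp=1, fn=1, tp=3.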

What I Learned

- Data cleaning is a HUGE part of any machine learning project. Seriously, spend more time on this than you think you need to.

- Feature engineering matters. Adding a few new features (like the difference in ranking between Badosa and her opponent) improved the model slightly.

- There’s a ton more to learn! I only scratched the surface with Logistic Regression. I want to try more complex models like Random Forests or Gradient Boosting.
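The ranking-difference feature is basically a one-liner in pandas. A minimal sketch, assuming hypothetical `badosa_rank` and `opponent_rank` columns where a lower number means a better ranking. The `badosa_is_underdog` flag is an extra, not necessarily part of the original model; it’s here because upsets were where the model struggled.

```python
import pandas as pd


def add_rank_features(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with ranking-based features added."""
    df = df.copy()  # don't mutate the caller's frame
    # Positive rank_diff: Badosa's ranking number is higher, i.e. she is the underdog.
    df["rank_diff"] = df["badosa_rank"] - df["opponent_rank"]
    # Binary underdog flag derived from the same difference.
    df["badosa_is_underdog"] = (df["rank_diff"] > 0).astype(int)
    return df


if __name__ == "__main__":
    # Made-up rankings for illustration only.
    demo = pd.DataFrame({"badosa_rank": [10, 30], "opponent_rank": [25, 5]})
    print(add_rank_features(demo))
```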

Next Steps

I’m planning to keep working on this. Here’s what I want to do next:

- Get more data! The more data, the better.

- Try different models and compare their performance.

- Add more features, like recent form and injury history.

- Maybe even try to predict the score of the match, not just the winner.
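For comparing models, cross-validation is a fairer test than a single 80/20 split: each model is scored on several different held-out folds and the scores are averaged, so one lucky or unlucky split matters less. Here’s a sketch using scikit-learn’s `cross_val_score`. The hyperparameters are untuned defaults picked for illustration, and the demo data is synthetic.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def compare_models(X, y, folds=5, seed=0):
    """Mean cross-validated accuracy for each candidate model."""
    candidates = {
        "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "random_forest": RandomForestClassifier(n_estimators=200, random_state=seed),
        "gradient_boosting": GradientBoostingClassifier(random_state=seed),
    }
    # For classifiers, an integer cv means stratified k-fold splitting.
    return {
        name: float(cross_val_score(model, X, y, cv=folds, scoring="accuracy").mean())
        for name, model in candidates.items()
    }


if __name__ == "__main__":
    # Synthetic stand-in data; these scores say nothing about real matches.
    rng = np.random.default_rng(1)
    X = rng.normal(size=(150, 4))
    y = (X[:, 0] + X[:, 1] * X[:, 2] > 0).astype(int)
    for name, score in compare_models(X, y).items():
        print(f"{name}: {score:.2f}")
```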

So yeah, that’s my Paula Badosa prediction project. It’s a work in progress, but I’m having fun with it. And hopefully, it’ll help me win some bets… just kidding (mostly)!